Emergence of PLG

For the past two years I worked at a high-growth, bootstrapped e-commerce company. How big? Wish I was allowed to say, but let’s just say it was substantial. It seems that during this time, with my head in the proverbial e-commerce sand, a new term came to the forefront at SaaS companies: Product-Led Growth, or PLG.

What is PLG?

Admittedly, I didn’t understand it at first. What was this elusive PLG, and why was the market abuzz? After I read a few articles, I came to interpret PLG as a self-service company that also has product-market fit, and thus is able to onboard many customers in a frictionless way without a sales-assisted motion. Then it hit me: this is what DigitalOcean is, and was, all about. A simple, frictionless experience for developers to buy cloud servers. On a related note, I think that any e-commerce company with an obsessive focus on top-of-funnel optimization and site conversion is doing PLG as well. So across my last two roles combined, I have about 8 years of experience with PLG 🙂

My Expertise: Building teams at a PLG Company

This led me to realize that my expertise is in building post-sales* teams at PLG companies, across Operations, Success, Support, and Trust & Safety. To me, post-sales work can be thought of in 3 ways:

- Proactive: Generating an outbound contact to sell more, improve retention, or further a relationship (Customer Success).

- Reactive: Responding when friction or problems in the experience lead to an issue, ticket, or report (Support, Trust & Safety).

- Efficiency-Driven: Developing a more efficient process or method to add business value (Operations).

TLDR for Executives & Operators

Inherently, none of these activities are unique to PLG. The distinction comes in the cross-functional relationships and processes that you build. For example, take a user-submitted bug report that Support verifies and an engineering team then fixes. This process sounds very simple, almost IFTTT-driven, but it is actually very complex and vital in a PLG company. My rough estimate is that I’ve been at companies that have onboarded and served more than 1 million customers, so I’ve had the chance to build, tear down, and rebuild loads of these processes. Here are some non-obvious takeaways from those experiences:

- The best companies create processes that have a short time to acknowledge an issue, and your customers will notice and appreciate that quick reaction. I don’t care as much about the time to fix an issue because it’s less related to cross-functional processes, and could be a signal of other issues (hiring, eng prioritization, etc.).

- Keep a scorecard of your victories. Everyone keeps a backlog of work to do, but I’ve seen fewer teams keep a list of wins, AKA product improvements that were driven by customer feedback. Review these with your teams, and celebrate the teams who ship for your customers. It will go a long way toward building relationships.

- Teach your post-sales teams how to say “no”. Any type of post-sales work requires empathy, and a lot of support teams acutely feel a customer’s pain, which makes them effective in their role. The best support teams have empathy AND a strong sense of what the business needs, so they’re able to say “no” to requests that don’t align and offer an alternative. A report from a VIP customer and a report from a novice are not created equal. Telling the difference is a learnable and teachable skill, and it is incumbent upon the leader to create that clarity, because the quality of customer feedback in your organization depends on it.

- It’s everyone’s role to care about the customer’s experience. If you’re interviewing at a company that puts all the responsibility for customer happiness on post-sales, then you should run for the hills. Instead, find a company where every employee acts in the best interest of the customer, and make sure to get examples. It needs to be part of a company’s DNA.
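If you want to put numbers on the first takeaway, here is a minimal sketch of how I might track time to acknowledge separately from time to fix. The ticket records and field names (`reported`, `acknowledged`, `fixed`) are made up for illustration and don’t come from any particular support tool, so treat this as a starting point rather than a finished report.

```python
from datetime import datetime
from statistics import median

# Hypothetical ticket export; the field names are assumptions for illustration.
tickets = [
    {"id": 1, "reported": "2021-03-01T09:00", "acknowledged": "2021-03-01T09:20", "fixed": "2021-03-04T09:00"},
    {"id": 2, "reported": "2021-03-02T14:00", "acknowledged": "2021-03-02T16:00", "fixed": "2021-03-02T18:00"},
    {"id": 3, "reported": "2021-03-03T08:00", "acknowledged": "2021-03-03T08:40", "fixed": None},  # still open
]


def hours_between(start, end):
    """Elapsed hours between two ISO-8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 3600


def median_hours(records, start_field, end_field):
    """Median elapsed hours across records that have both timestamps set."""
    durations = [
        hours_between(r[start_field], r[end_field])
        for r in records
        if r[start_field] and r[end_field]
    ]
    return median(durations) if durations else None


# Time to acknowledge reflects the cross-functional process;
# time to fix is tracked separately because other factors drive it.
time_to_ack = median_hours(tickets, "reported", "acknowledged")
time_to_fix = median_hours(tickets, "reported", "fixed")

print(f"Median time to acknowledge: {time_to_ack:.2f} hours")
print(f"Median time to fix: {time_to_fix:.2f} hours")
```

I use the median here so that one slow outlier doesn’t hide an otherwise quick acknowledgement process, but that is a judgment call.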

So that’s about it. Some learning, a personal realization, and some lessons. What do you think I missed, got right, or got wrong?

*Post-Sales: For the vast majority of customers, we actually did no selling. Our interactions were overwhelmingly with existing customers, and less than 5% were pre-sales. It’s also interesting to note that sales did not work (at all) for DigitalOcean while I was there, and at Wild Alaskan, the majority of pre-sales questions could be handled via self-service and product improvements.

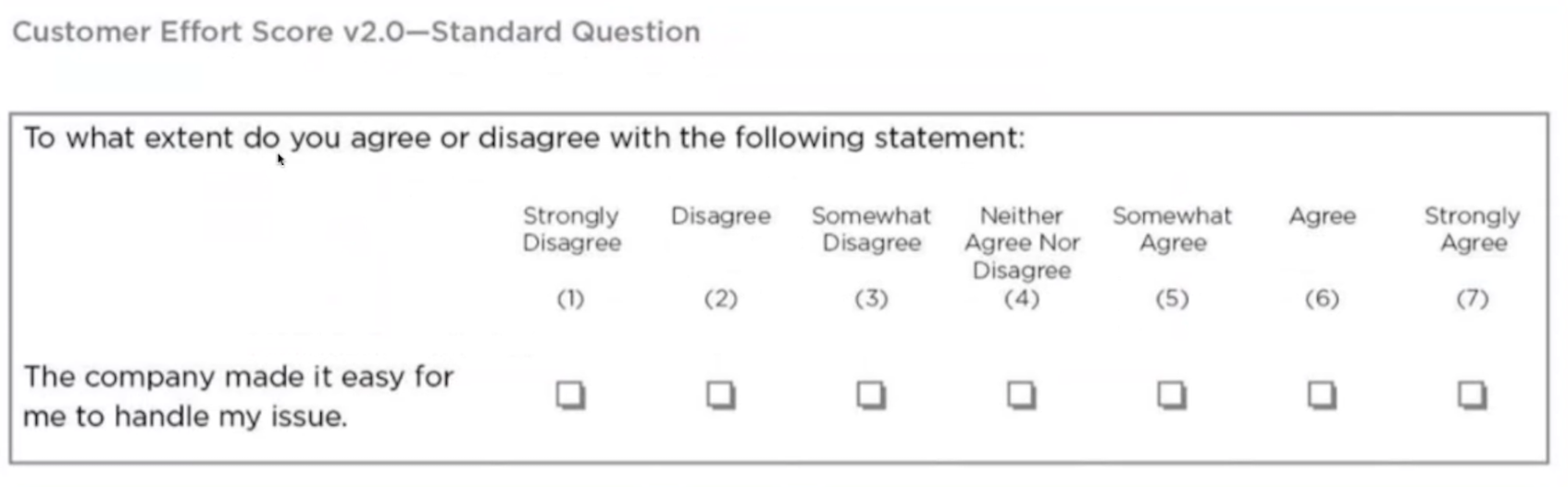

First CES Survey Version from January 2016


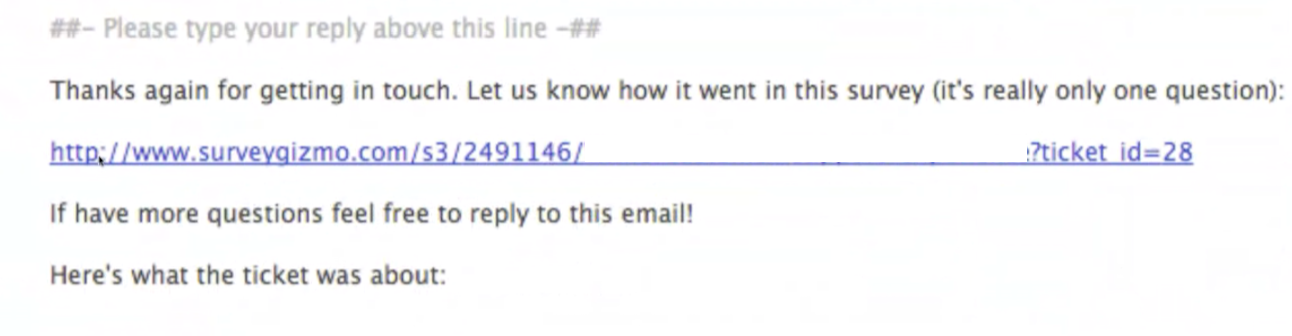

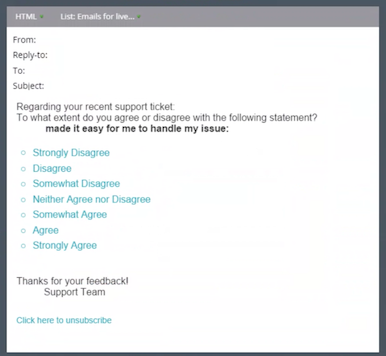

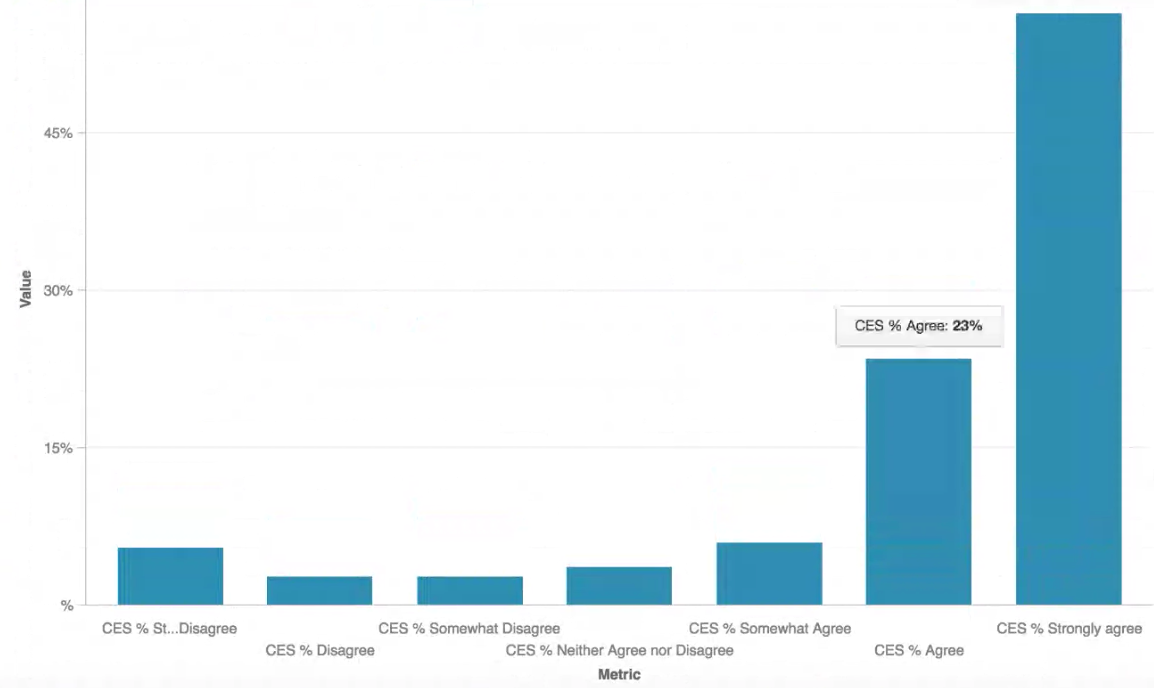

Example of how to capture comments in a custom-built tool


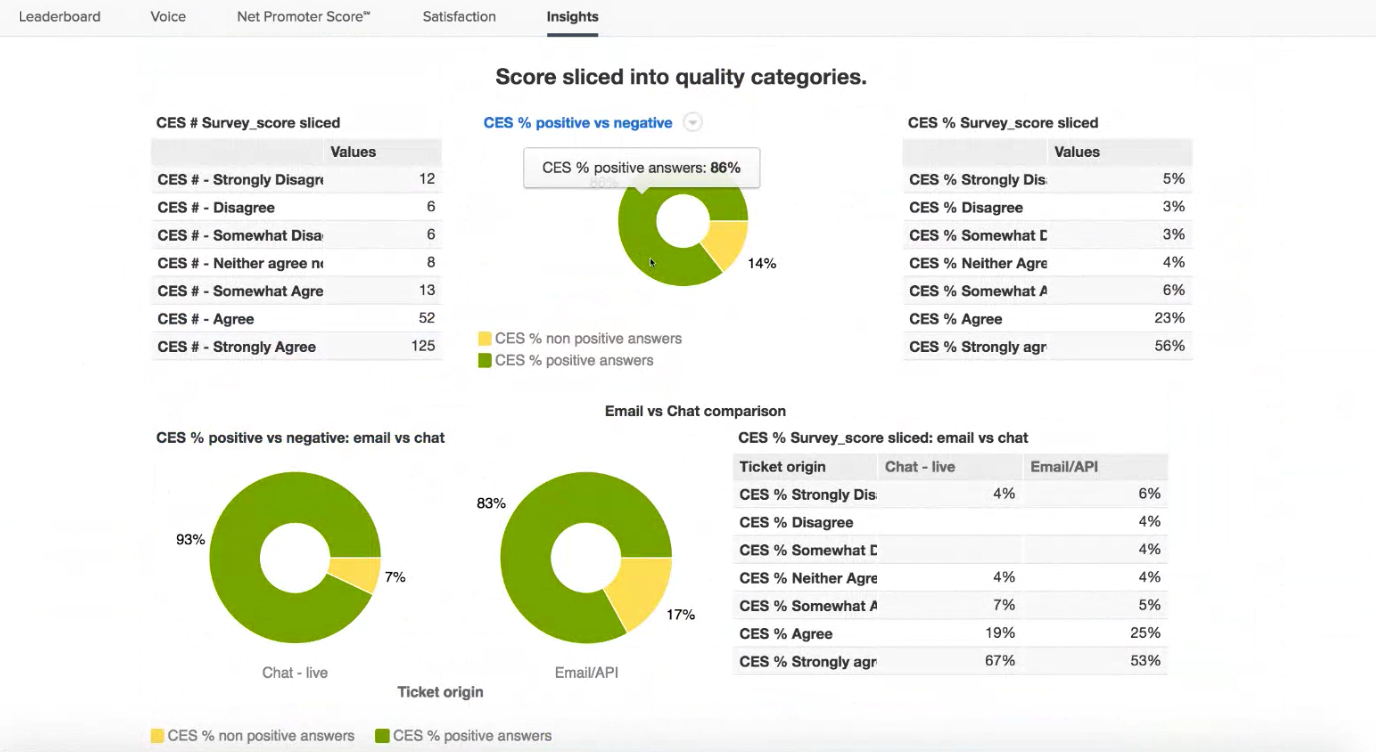

Sample CES Dashboard

Sample CES Dashboard in GoodData